repo

Colab notebook

Fine-tuning Electra and interpreting with Captum Integrated Gradients

This notebook contains an example of fine-tuning an Electra model on the GLUE SST-2 dataset. After fine-tuning, the Integrated Gradients interpretability method is applied to compute token attributions for each target class.
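For reference, this is the standard definition of Integrated Gradients. For a model output F, an input x and a baseline x', the attribution to input feature i accumulates the gradient of F along the straight-line path from x' to x. The second line is the completeness property discussed in the list that follows. For text models, x is usually the sequence of token embeddings, and a per-token score is obtained by aggregating over the embedding dimensions; the exact aggregation the notebook uses is not described here.

$$
\mathrm{IG}_i(x) = (x_i - x'_i) \int_0^1 \frac{\partial F\big(x' + \alpha (x - x')\big)}{\partial x_i} \, d\alpha
$$

$$
\sum_i \mathrm{IG}_i(x) = F(x) - F(x')
$$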

- We will instantiate a pre-trained Electra model from the Transformers library.

- The data is downloaded with the Hugging Face nlp library. The input text is tokenized with the ElectraTokenizerFast tokenizer, which is backed by the Hugging Face tokenizers library.

- Fine-tuning for sentiment analysis is handled by the Trainer class.

- After fine-tuning, the Integrated Gradients interpretability algorithm will assign importance scores to input tokens. We will use a PyTorch implementation from the Captum library.

- The algorithm requires a reference sample (a baseline), because attributions are computed from how the model's output changes as the input moves from the reference values to the actual sample.

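- The Integrated Gradients method satisfies the completeness property: the attributions for a sample add up to the difference between the model's output for that sample and its output for the baseline. We will compute the sum of attributions for a sample and show that it approximates (explains) the prediction's shift from the baseline value.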

- The final sections of the notebook contain a colour-coded visualization of the attribution results, made with the captum.attr.visualization module.
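The completeness check can be illustrated without Electra at all. This is a minimal, self-contained sketch that assumes a toy logistic model with a hand-written gradient (not the notebook's model) and an all-zeros baseline chosen only for the toy. It approximates the path integral with a midpoint Riemann sum and compares the summed attributions with F(x) - F(x').

```python
import math

# Toy differentiable "model" (an assumption for illustration, not Electra):
# F(x) = sigmoid(w . x + b), a single-output logistic score.
W = [0.8, -1.5, 2.0]
B = 0.1


def model(x):
    z = sum(wi * xi for wi, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def model_grad(x):
    # For the logistic score, dF/dx_i = F(x) * (1 - F(x)) * w_i.
    f = model(x)
    return [f * (1.0 - f) * wi for wi in W]


def integrated_gradients(x, baseline, steps=50):
    """Approximate IG with a midpoint Riemann sum along the straight path."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(model_grad(point)):
            total[i] += g
    # Average gradient times the feature's displacement from the baseline.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


x = [1.0, 0.5, 1.2]
baseline = [0.0, 0.0, 0.0]
attr = integrated_gradients(x, baseline)
delta = model(x) - model(baseline)
print(sum(attr), delta)  # the two values should nearly coincide
```

Increasing `steps` shrinks the gap between the two printed numbers. Captum's `IntegratedGradients` and `LayerIntegratedGradients` expose the same controls through the `n_steps` argument of `attribute`, and `return_convergence_delta=True` makes `attribute` also return that gap.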

The notebook is based on the Hugging Face documentation, and the implementation of the Integrated Gradients attribution method is adapted from the Captum.ai tutorial Interpreting BERT Models (Part 1).

Visualization

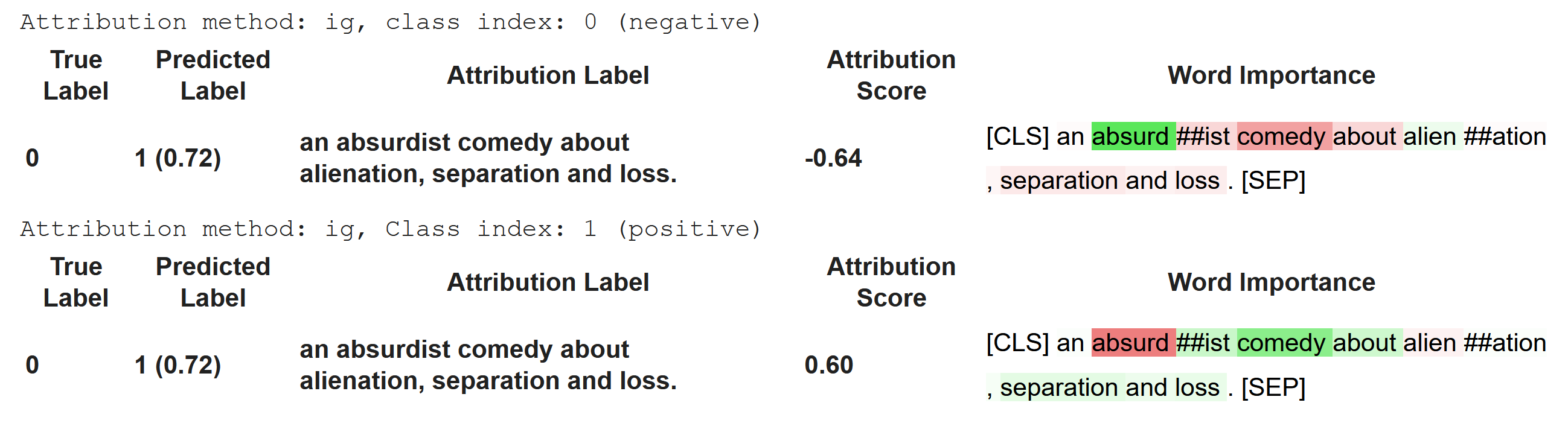

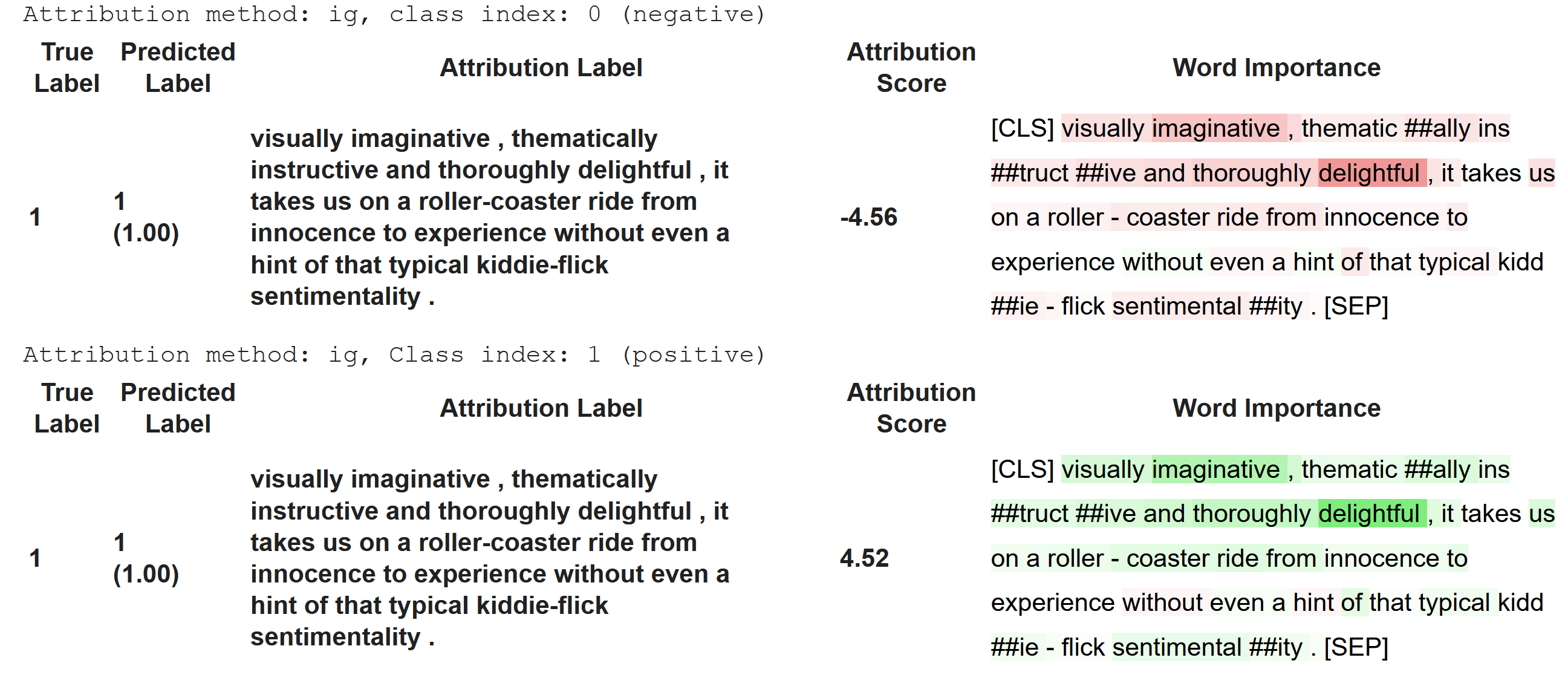

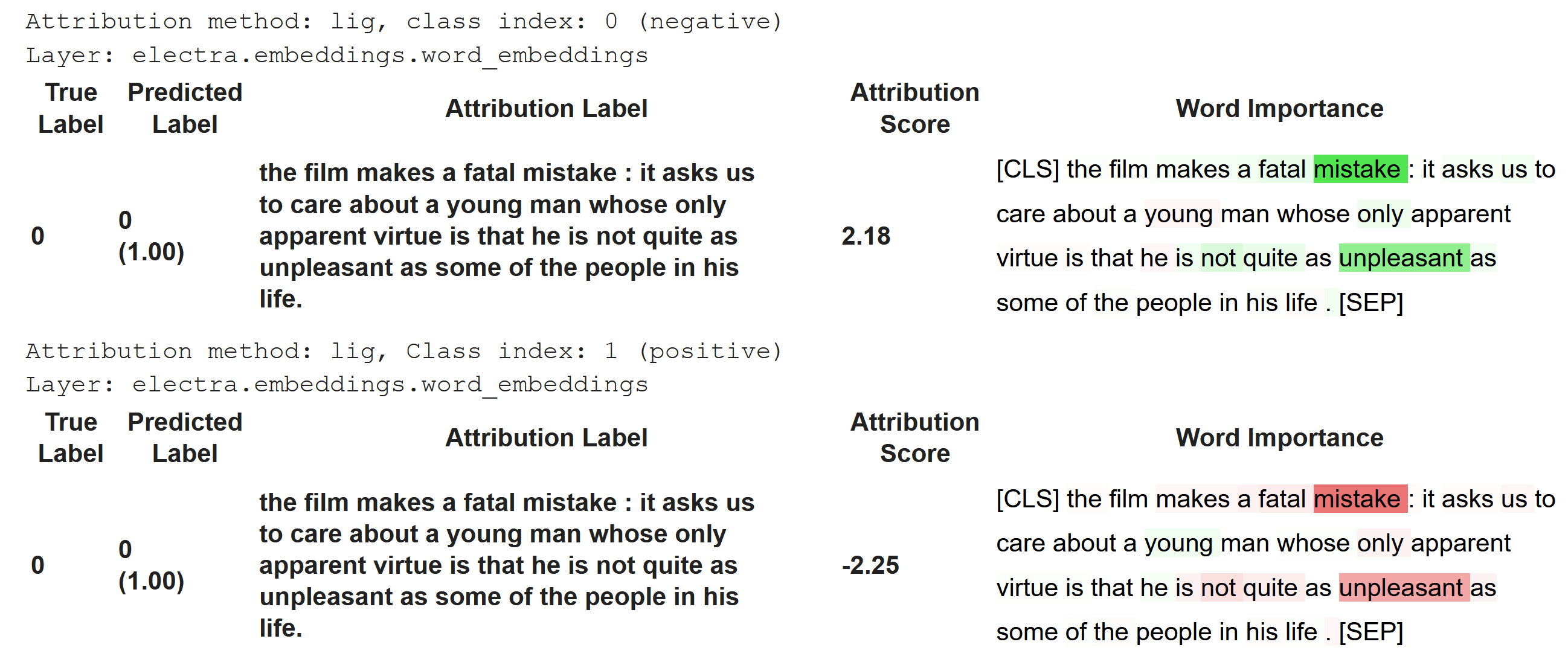

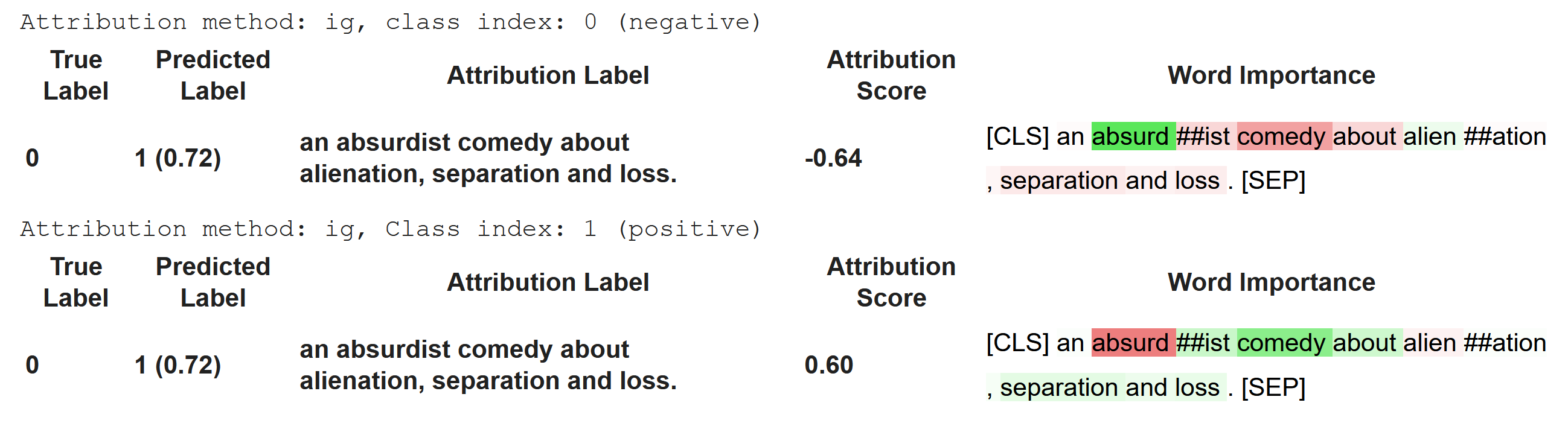

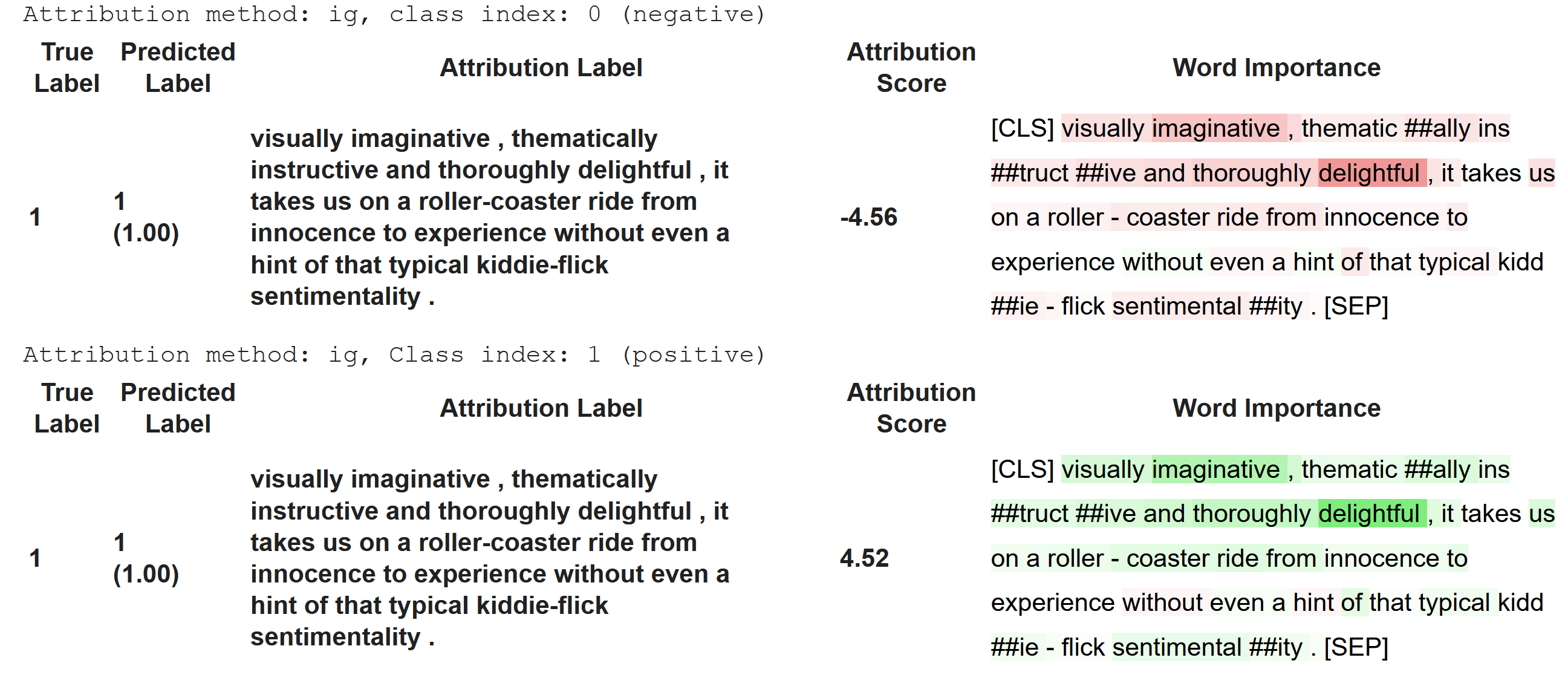

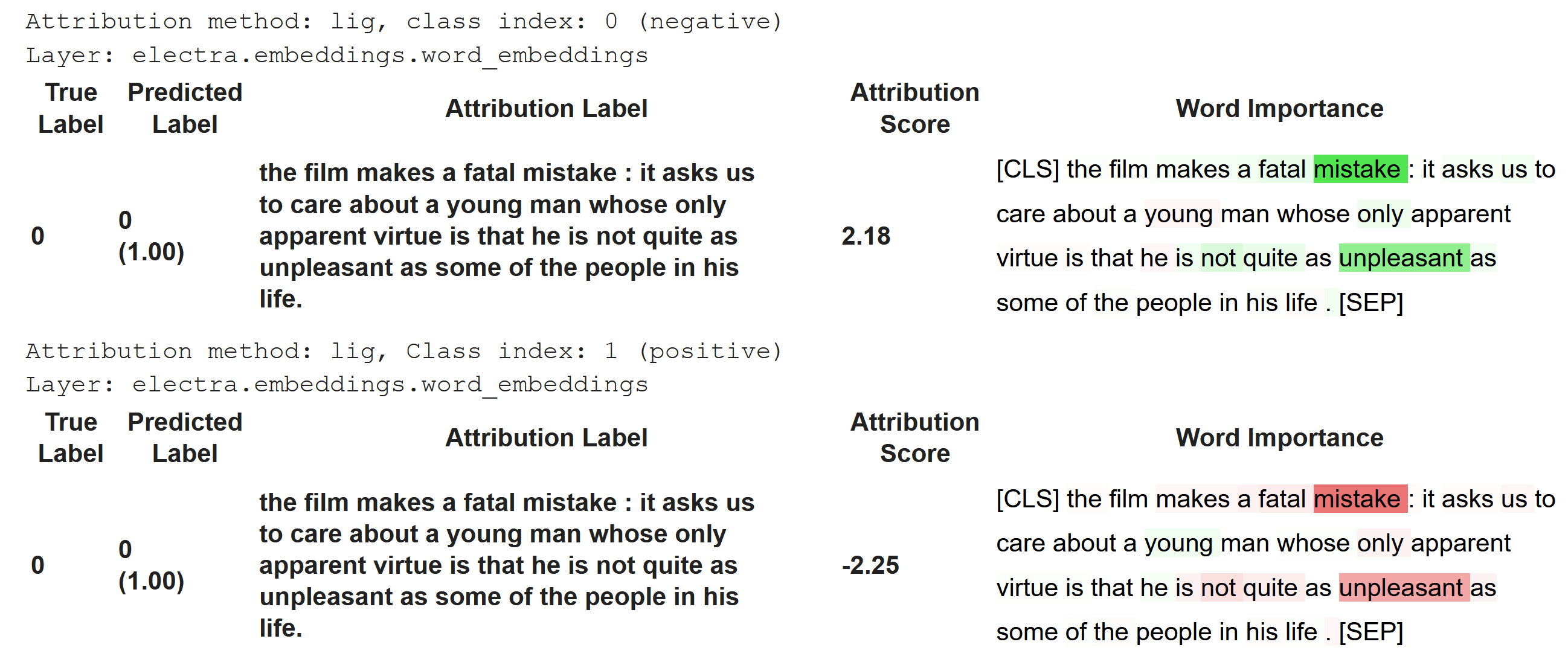

The Captum visualization library highlights in green the tokens that push the prediction towards the target class, and marks in red those that drive the score towards the reference value. As a result, words perceived as positive appear in green when attribution is performed against class 1 (positive), but are highlighted in red when attribution targets class 0 (negative).
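With only two classes, this flip follows directly from the softmax: p_0 = 1 - p_1, so every gradient of p_0 is the negative of the corresponding gradient of p_1, and the Integrated Gradients attributions for class 0 are the exact negatives of those for class 1. This is a minimal sketch, assuming a hypothetical two-class linear classifier and a forward function that returns softmax probabilities. If raw logits were attributed instead, the relationship would in general no longer be an exact sign flip. The `colour` helper is a simplified stand-in for the green/red convention, not Captum's own code.

```python
import math

# Hypothetical two-class linear classifier standing in for the fine-tuned model.
W = {0: [0.5, -1.0, 0.3], 1: [-0.2, 0.9, 1.1]}
B = {0: 0.0, 1: 0.2}


def probs(x):
    logits = [sum(w * v for w, v in zip(W[c], x)) + B[c] for c in (0, 1)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def prob_grad(x, target):
    # Softmax gradient: dp_t/dx = p_t * (w_t - sum_c p_c * w_c), here with two classes.
    p = probs(x)
    mean_w = [p[0] * a + p[1] * b for a, b in zip(W[0], W[1])]
    return [p[target] * (wt - mw) for wt, mw in zip(W[target], mean_w)]


def integrated_gradients(x, baseline, target, steps=50):
    """Midpoint Riemann approximation of IG for the probability of `target`."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(prob_grad(point, target)):
            total[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


def colour(score):
    # Simplified convention: positive -> green, negative -> red.
    if score > 0:
        return "green"
    if score < 0:
        return "red"
    return "neutral"


x = [1.0, 2.0, -0.5]
baseline = [0.0, 0.0, 0.0]
attr_pos = integrated_gradients(x, baseline, target=1)
attr_neg = integrated_gradients(x, baseline, target=0)
print([colour(a) for a in attr_pos])
print([colour(a) for a in attr_neg])  # every colour is flipped
```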

Because importance scores are assigned to tokens rather than words, some examples show that attribution can depend heavily on tokenization.
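A word split into several subword pieces receives several separate scores. One way to read such examples, sketched here as an assumption rather than something the notebook does, is to sum the pieces back into word-level scores. Summing leaves the total unchanged, so the completeness property still holds for the merged scores. The helper `merge_subword_attributions` and the example tokens and scores are hypothetical. The sketch assumes BERT-style WordPiece tokens in which continuation pieces start with `##`, as in ELECTRA's vocabulary. With a fast tokenizer such as ElectraTokenizerFast, calling `word_ids()` on the encoding is a more robust way to map tokens to words.

```python
def merge_subword_attributions(tokens, scores):
    """Sum token-level attributions into word-level scores (hypothetical helper).

    Assumes WordPiece-style tokens, where a piece starting with '##'
    continues the previous word.
    """
    words, word_scores = [], []
    for tok, score in zip(tokens, scores):
        if tok.startswith("##") and words:
            words[-1] += tok[2:]        # glue the piece onto the current word
            word_scores[-1] += score    # and accumulate its attribution
        else:
            words.append(tok)
            word_scores.append(score)
    return list(zip(words, word_scores))


# Illustrative tokens and scores only; not real model output.
tokens = ["the", "film", "is", "un", "##watch", "##able"]
scores = [0.01, 0.05, 0.02, -0.30, 0.10, -0.25]
for word, score in merge_subword_attributions(tokens, scores):
    print(f"{word:>12s} {score:+.2f}")
```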

Attributions for a correctly classified positive example

Attributions for a correctly classified negative example

Attributions for a negative sample misclassified as positive