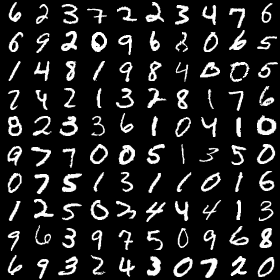

Alireza Makhzani, Jonathon Shlens, Navdeep Jaitly, and Ian J. Goodfellow. 2015. Adversarial Autoencoders. CoRR abs/1511.05644 (2015). Figure 3 from the paper.

A TensorFlow 2.0 implementation of the Adversarial Autoencoder (ICLR 2016).

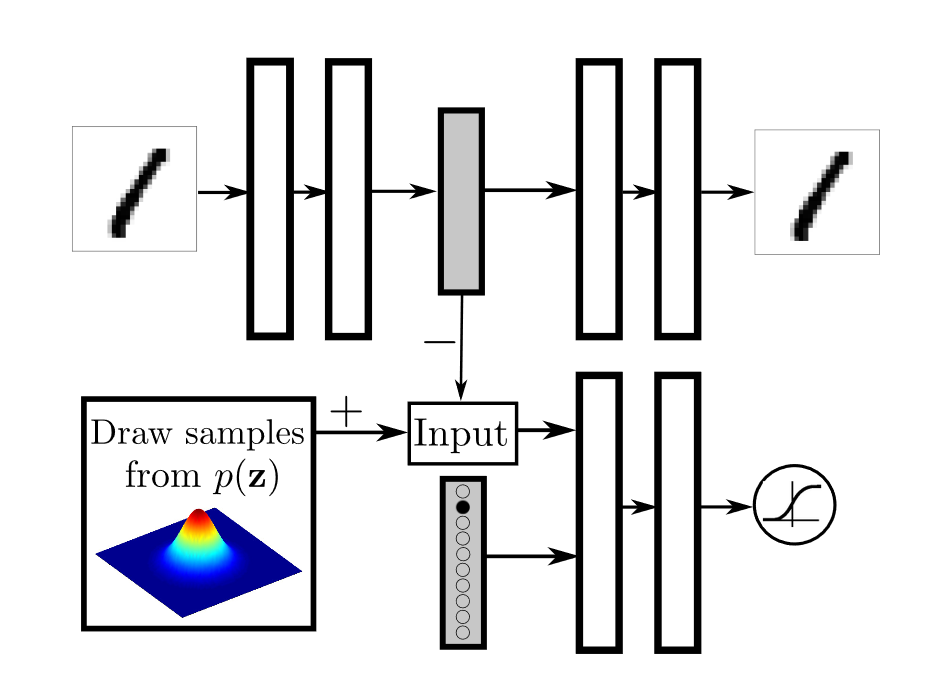

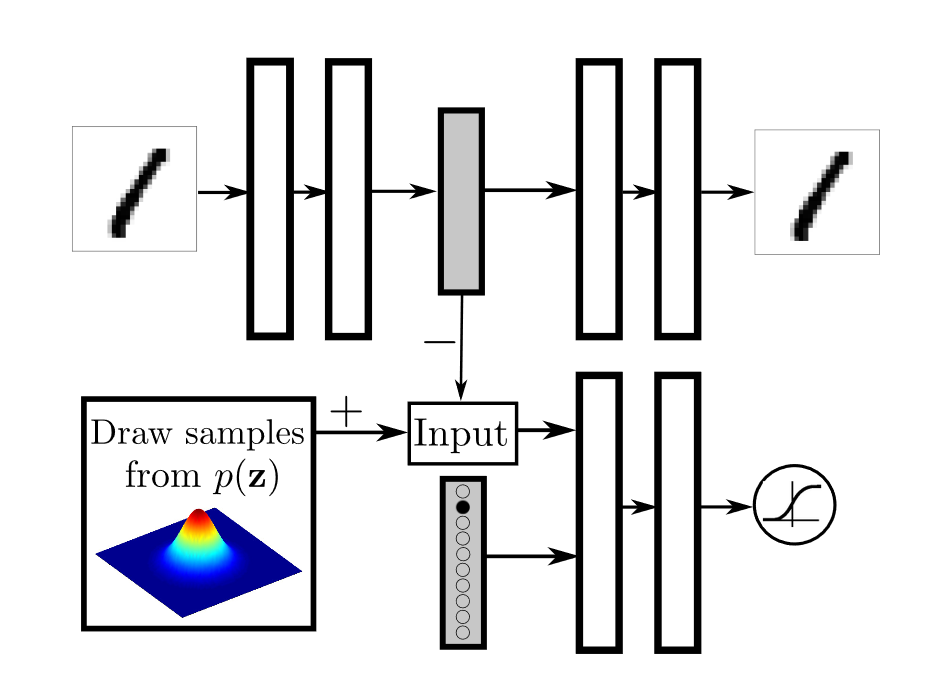

| Architecture | Description |

|---|---|

|

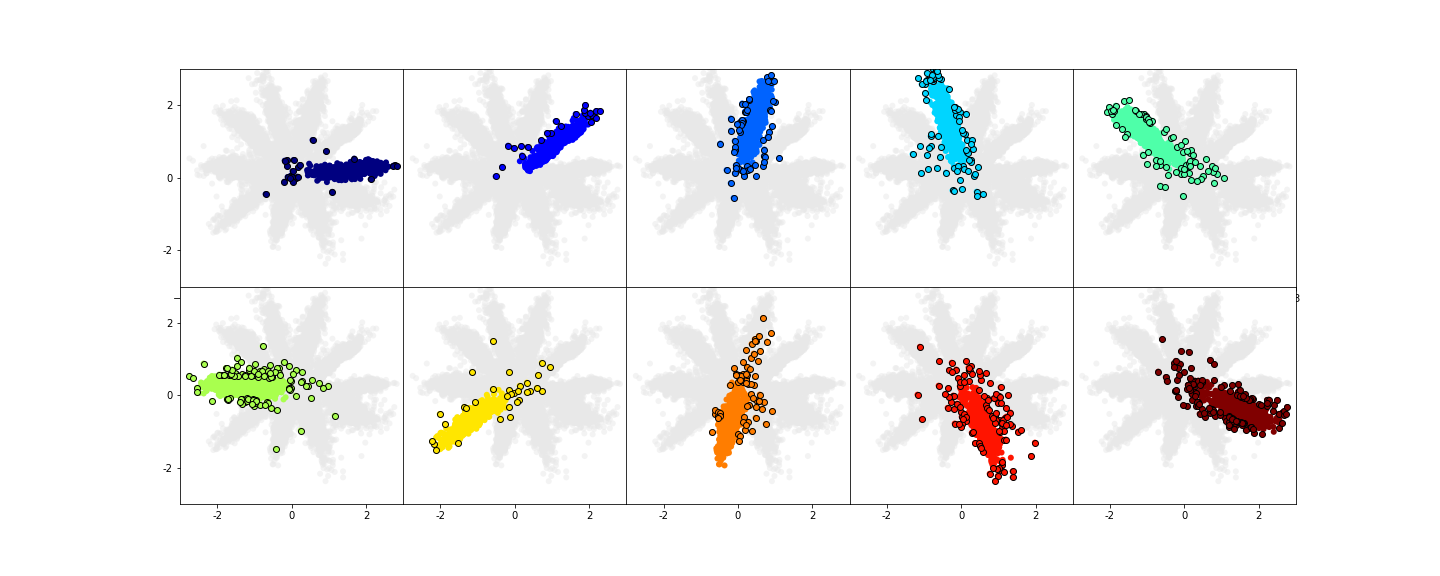

Regularization of the hidden code by incorporationg full label information (Fig.3 from the paper). Alireza Makhzani, Jonathon Shlens, Navdeep Jaitly, and Ian J. Goodfellow. 2015. Adversarial Autoencoders. CoRRabs/1511.05644 (2015). Figure 3 from the paper. |
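To make "incorporating full label information" concrete, the sketch below shows one way the discriminator input could be assembled: the latent code is concatenated with a one-hot encoding of the class label, so the discriminator can tie each label to one mode of the prior. The helper names `one_hot` and `discriminator_input` are hypothetical, and the code is framework-free Python rather than the repository's TensorFlow implementation.

```python
def one_hot(label, num_classes=10):
    """Return a one-hot list for an integer class label."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def discriminator_input(z, label, num_classes=10):
    """Concatenate a latent code with the one-hot label it belongs to.

    Assumption: the discriminator sees [z, one_hot(label)] both for
    samples drawn from the prior and for codes produced by the encoder.
    """
    return list(z) + one_hot(label, num_classes)


# A 2-D latent code for an image of class 3 becomes a 12-D input.
x = discriminator_input([0.3, -1.2], label=3)
print(len(x))
```

With a 2-D latent space and 10 classes, the discriminator input has 12 dimensions under this assumption.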

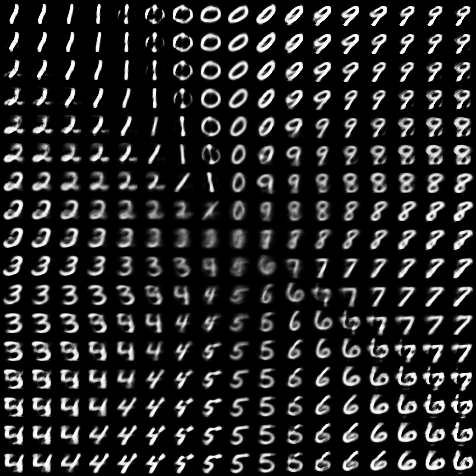

gaussian_mixture prior

| Target prior distribution | Learnt latent space | Sampled decoder output |
|---|---|---|
| | | |
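As a rough illustration of what a `gaussian_mixture` target prior can look like, the sketch below draws 2-D points from a mixture whose components are elongated Gaussians arranged around a circle, one per class. The standard deviations match the documented defaults of `--gm_x_stddev` (0.5) and `--gm_y_stddev` (0.1). The function name `sample_gaussian_mixture`, the `radius` parameter, and the radial layout itself are assumptions for illustration, not taken from the repository code.

```python
import math
import random


def sample_gaussian_mixture(label, num_classes=10, x_stddev=0.5,
                            y_stddev=0.1, radius=1.0, rng=random):
    """Draw one 2-D point from the mixture component selected by `label`."""
    # Axis-aligned Gaussian, elongated along x and shifted away from the origin.
    x = rng.gauss(radius, x_stddev)
    y = rng.gauss(0.0, y_stddev)
    # Rotate the point to the angle assigned to this component.
    angle = 2.0 * math.pi * label / num_classes
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle))


# Draw a batch of 128 points with uniformly random component labels.
rng = random.Random(0)
batch = [sample_gaussian_mixture(rng.randrange(10), rng=rng) for _ in range(128)]
print(len(batch), len(batch[0]))
```

Passing the class label of each training image instead of a random label would select the matching component, which is how a labelled prior can pair each class with one mode.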

| Input images | Reconstructed images |
|---|---|
| | |

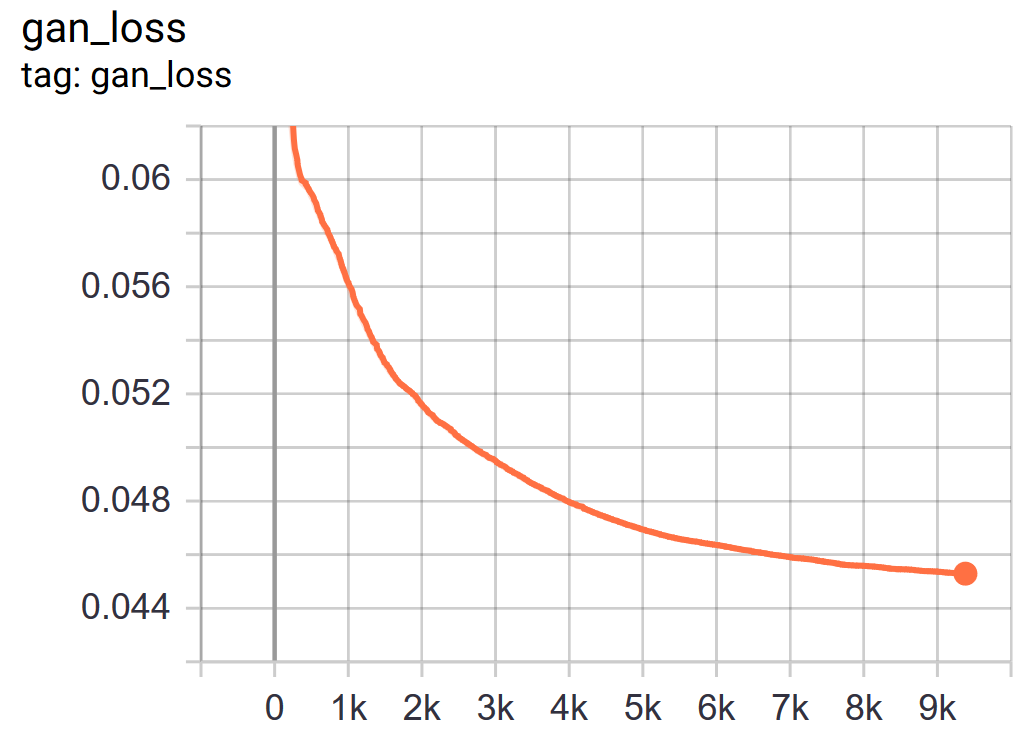

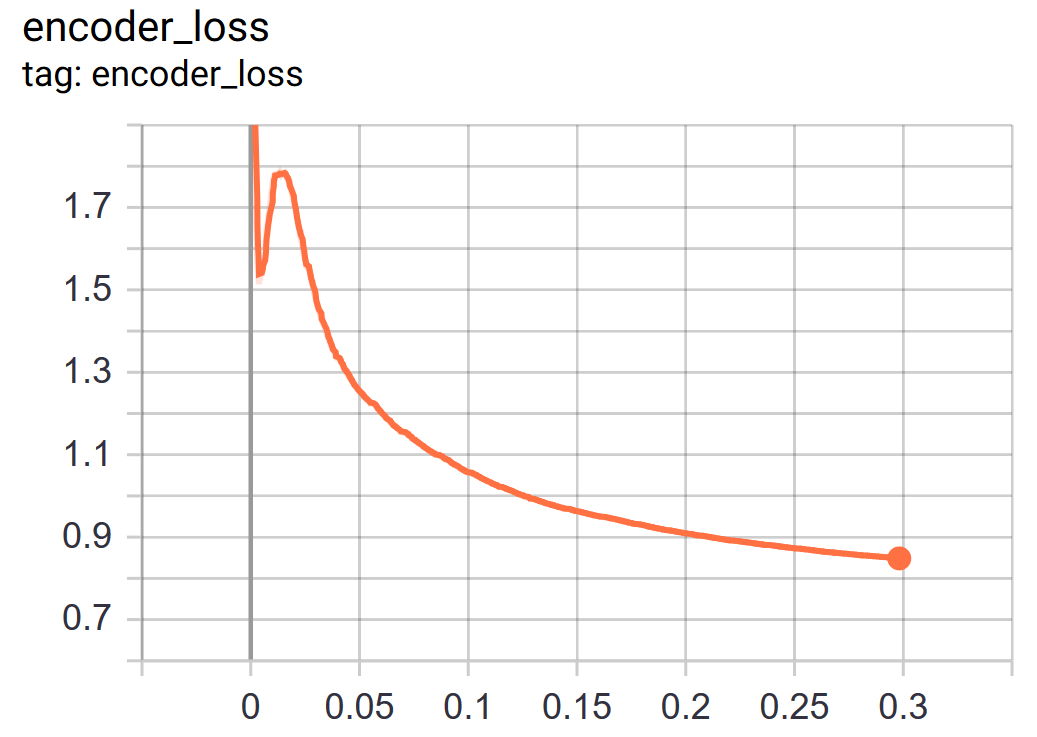

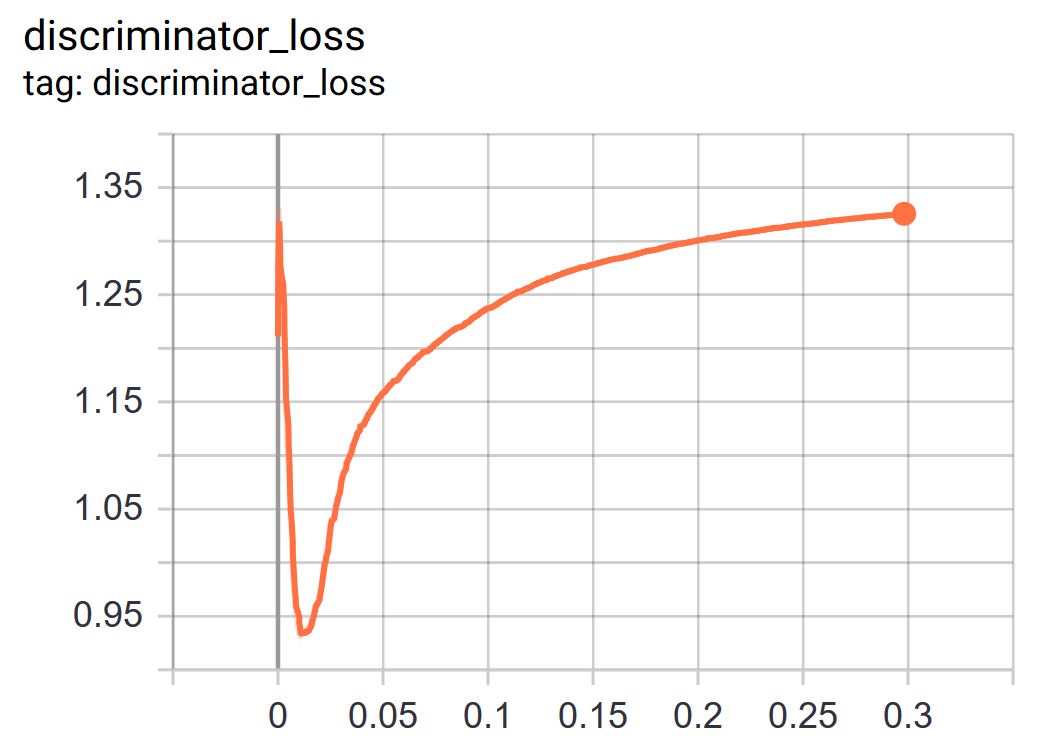

| GAN | Encoder | Discriminator |
|---|---|---|
| | | |

python train_model.py --prior_type gaussian_mixture

- `--prior_type`: Type of target prior distribution. Default: gaussian_mixture. Required.
- `--results_dir`: Training visualization directory. Default: results. Created if non-existent.
- `--log_dir`: Log directory (Tensorboard). Default: logs. Created if non-existent.
- `--gm_x_stddev`: Gaussian mixture prior: standard deviation for the x coord. Default: 0.5
- `--gm_y_stddev`: Gaussian mixture prior: standard deviation for the y coord. Default: 0.1
- `--n_epochs`: Number of epochs. Default: 20
- `--learning_rate`: Learning rate. Default: 0.001
- `--batch_size`: Batch size. Default: 128
- `--num_classes`: Number of classes (for further use). Default: 10

Visualization of outliers from learnt distribution in the latent space
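For readers who want to see how these options fit together, here is a minimal `argparse` sketch that mirrors the documented flags and defaults. It is not the actual contents of `train_model.py`: the function name `build_parser` is hypothetical, and `--prior_type` is modelled as an optional flag with a default, since the description lists both a default and "Required".

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented flags and defaults."""
    parser = argparse.ArgumentParser(description="Train an adversarial autoencoder.")
    parser.add_argument("--prior_type", type=str, default="gaussian_mixture",
                        help="Type of target prior distribution.")
    parser.add_argument("--results_dir", type=str, default="results",
                        help="Training visualization directory.")
    parser.add_argument("--log_dir", type=str, default="logs",
                        help="Log directory (Tensorboard).")
    parser.add_argument("--gm_x_stddev", type=float, default=0.5,
                        help="Gaussian mixture prior: std. dev. for the x coord.")
    parser.add_argument("--gm_y_stddev", type=float, default=0.1,
                        help="Gaussian mixture prior: std. dev. for the y coord.")
    parser.add_argument("--n_epochs", type=int, default=20,
                        help="Number of epochs.")
    parser.add_argument("--learning_rate", type=float, default=0.001,
                        help="Learning rate.")
    parser.add_argument("--batch_size", type=int, default=128,
                        help="Batch size.")
    parser.add_argument("--num_classes", type=int, default=10,
                        help="Number of classes (for further use).")
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch is testable.
args = build_parser().parse_args(["--prior_type", "gaussian_mixture", "--n_epochs", "5"])
print(args.n_epochs, args.batch_size)
```

Flags that are not passed fall back to the defaults listed above, so the example overrides only the number of epochs.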